What kind of VR glasses can blur the line between virtual and real?

Zuckerberg’s VR glasses that can fool the brain look like this:

From the outside it looks no different from other VR glasses, but the interesting part is inside. This prototype tackles the four major barriers that keep VR from getting arbitrarily close to a real scene:

The first is the problem of how the human eye focuses in a virtual VR scene.

Generally speaking, the human eye adjusts itself according to the distance of the object it is looking at. When the object is close, the two eyes rotate inward to converge on it and each eye's lens focuses at that short distance. When the object moves farther away, the lines of sight spread apart again and the eyes have to refocus to form a sharp image.

This coupled process of converging and focusing is what we call vergence and accommodation.

But this automatic adjustment tends to break down when the eye encounters a virtual VR scene.

The principle of virtual reality is, simply put, to show slightly offset images to your left and right eyes to create a 3D effect. The greater the offset, the closer the object appears, yet the eye still has to focus at the fixed, usually farther, distance of the display.

This “deception” of the eye creates a contradiction that has long been known as the vergence-accommodation conflict.

The dizziness and fatigue we feel after wearing VR glasses are caused by this mismatch between convergence and focus.
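The size of this mismatch can be put into rough numbers. The sketch below is only an illustration: the 63 mm interpupillary distance and the 1.5 m display focal distance are assumed values, not figures from Meta, and the function names are hypothetical.

```python
import math

def vergence_angle_deg(distance_m, ipd_m=0.063):
    """Angle between the two lines of sight when fixating a point straight ahead.

    ipd_m is an assumed interpupillary distance of 63 mm.
    """
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))

def conflict_diopters(virtual_distance_m, focal_distance_m):
    """Gap between where the eyes converge and where they must focus, in diopters (1/m)."""
    return abs(1 / virtual_distance_m - 1 / focal_distance_m)

if __name__ == "__main__":
    focal_distance_m = 1.5  # assumed fixed focal distance of the headset optics
    for d in (0.3, 0.5, 1.0, 2.0):
        print(f"object at {d} m: vergence {vergence_angle_deg(d):.2f} deg, "
              f"conflict {conflict_diopters(d, focal_distance_m):.2f} D")
```

Under these assumptions the conflict grows quickly as the virtual object approaches the face, which is where a fixed-focus headset is least comfortable.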

The second is the distortion problem.

The distortion comes from the way the display system of VR glasses works. Generally speaking, that system is a chain of “display screen – curved lens – human eye”: the image on the display passes through the lens and becomes what the eye sees.

However, when the image passes through the lens, refraction introduces pincushion distortion. To cancel it, the display has to show a barrel-distorted version of the normal image, which is the so-called anti-distortion process.
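A minimal sketch of the idea, assuming a one-coefficient radial model in which the lens moves a point at normalized radius r to r(1 + k1·r²); positive k1 gives pincushion distortion. The model, the coefficient value, and the function names are illustrative assumptions, not the correction Meta uses.

```python
def lens_distort(r, k1):
    """Radius seen through the lens for a point drawn at radius r (k1 > 0: pincushion)."""
    return r * (1 + k1 * r * r)

def predistort(r_target, k1, iterations=20):
    """Radius at which to draw a point so that it lands at r_target after the lens.

    Solves r * (1 + k1 * r^2) = r_target with Newton's method.
    """
    r = r_target
    for _ in range(iterations):
        f = r * (1 + k1 * r * r) - r_target
        df = 1 + 3 * k1 * r * r
        r -= f / df
    return r

if __name__ == "__main__":
    k1 = 0.2  # hypothetical distortion coefficient
    for r_target in (0.25, 0.5, 0.75, 1.0):
        r_draw = predistort(r_target, k1)
        print(f"target {r_target:.2f} -> draw at {r_draw:.4f} "
              f"-> seen at {lens_distort(r_draw, k1):.4f}")
```

Points near the edge are pulled inward more than points near the center, which is exactly the barrel shape described above.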

The third is the matter of retinal resolution.

If you want virtual reality to deceive the brain, the first thing to do is to make the picture seen by the eyes real and clear enough.

Specifically, the pixel density presented to the eyes should approach, or even match, the finest detail the eyes can resolve. The question is, what standard does this retinal resolution need to meet for the picture to look real?

According to the prevailing view in the industry, 60 PPD (pixels per degree of field of view) is the basic standard, but that is the threshold for demanding scenes. For moving pictures, about 30 PPD is enough that people can no longer see individual pixels, which is to say the fake can pass for real.
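As a rough illustration, average PPD is simply the number of pixels across the view divided by the field of view in degrees. The resolution and field-of-view numbers below are hypothetical round figures, and the calculation ignores the uneven pixel spread that real lenses introduce.

```python
def pixels_per_degree(pixels_across, fov_deg):
    """Average pixel density over the field of view."""
    return pixels_across / fov_deg

def pixels_needed(target_ppd, fov_deg):
    """Pixels required across the view to reach a target density."""
    return target_ppd * fov_deg

if __name__ == "__main__":
    # Hypothetical panel: 1800 pixels across a 90 degree view.
    print(pixels_per_degree(1800, 90))  # average density of that panel
    print(pixels_needed(60, 90))        # pixels required for 60 PPD at 90 degrees
    print(pixels_per_degree(1800, 45))  # same panel with half the field of view
```

The last line shows why narrowing the field of view is the cheapest way to raise PPD: the same panel covers fewer degrees, so each degree gets more pixels.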

Finally, there is HDR (High Dynamic Range). Reproducing the range of brightness that the human eye experiences in reality is crucial for virtual reality to simulate real scenes.

Those are the four major barriers. The solution, in short, is to use targeted technical means to fool the viewer.

What “deceptions” did Zuckerberg and the Meta team behind him use?

What technology lies behind them?

There are four core technologies, one for each of the four barriers above, and they all serve one purpose: to make the fake pass for real.

For the focus problem, the Zuckerberg team brought out the Half Dome prototype, which had been in development for some time. The Half Dome line has now reached its third generation.

From a technical point of view, Half Dome uses polarization-dependent lenses, whose focal length changes with the polarization state of the incoming light. By changing the voltage applied to a switchable wave plate, the headset can flip that polarization state and so switch flexibly between different focal lengths.

A series of polarization-dependent lenses and switchable half-wave plates is stacked together to achieve smooth, realistic changes of focus.
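Why stacking helps can be shown with a simplified model. The sketch below assumes each stage can be switched to contribute either zero or its full optical power, and that the powers of thin stacked elements simply add. The stage powers are hypothetical; the article does not give the real number of stages or their values.

```python
from itertools import product

def focal_states(stage_powers):
    """All distinct total optical powers (diopters) reachable by switching stages on or off."""
    states = set()
    for switches in product((0, 1), repeat=len(stage_powers)):
        total = sum(s * p for s, p in zip(switches, stage_powers))
        states.add(round(total, 6))
    return sorted(states)

if __name__ == "__main__":
    # Hypothetical stack with binary-weighted powers, in diopters.
    stack = [0.25, 0.5, 1.0]
    states = focal_states(stack)
    print(len(states), "focal states:", states)
```

With binary-weighted powers, every added stage doubles the number of evenly spaced focal states, so a handful of stages is enough to make the steps between them small.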

The simulated performance looks like this:

To solve the distortion problem, the Meta team built a dedicated “distortion simulator”, which uses a 3D TV to stand in for the VR headset and simulates the lens in software, so that distortion correction algorithms can be iterated quickly and the right anti-distortion image verified.

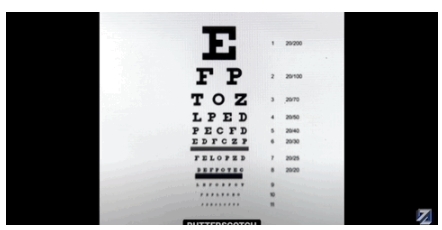

To address the resolution problem, Zuckerberg showed off the latest headset prototype, Butterscotch, which reaches 60 PPD, a retinal resolution sufficient for 20/20 vision.

That figure meets the industry standard for retinal resolution in VR devices.

Reportedly, this prototype uses a very high-resolution display and, at the same time, a reduced field of view, so that the pixels are concentrated in a smaller area.

The reduced field of view is only about half that of the Quest 2, Meta's current mass-produced headset, at about 45 degrees above and below the horizontal.

Finally, there is Starburst, a prototype the Meta team built to study the impact of HDR on the VR experience. Its brightness can reach 20,000 nits (a nit is a unit of luminance, one candela per square meter).

Of course, the purpose of this prototype is not brightness for its own sake, but to simulate real-world light sources such as explosions, fireworks, and glass reflections within such a high brightness range.

One last question, which you may already have thought of: how can so many prototypes and problem-solving devices be integrated into one small wearable prototype, Holocake 2?

At present, there are two main factors that affect the size of VR headsets: optical path length and lens width.

To reduce the optical path length, the approach is to fold the optical path.

Specifically, the Holocake 2 uses folded optics that bounce light back and forth using polarization, thereby folding the optical path and shortening the distance between the lens and the display.
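A back-of-the-envelope sketch of the saving, assuming the light crosses the gap between the reflective surfaces three times, as is typical of polarization-folded designs. The pass count and the path lengths are assumptions for illustration, not Holocake 2 specifications.

```python
def cavity_thickness_mm(optical_path_mm, passes=3):
    """Physical gap needed when light crosses it `passes` times to cover the optical path."""
    if passes < 1:
        raise ValueError("passes must be at least 1")
    return optical_path_mm / passes

if __name__ == "__main__":
    for path_mm in (30.0, 45.0):  # hypothetical optical path lengths
        print(f"{path_mm} mm optical path -> "
              f"{cavity_thickness_mm(path_mm)} mm physical gap when folded")
```

In this simplified picture the same optical path fits into a third of the physical depth, which is where the thinner headset comes from.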

The lens width is taken care of by the holographic lens.

The so-called holographic lens is a holographic film that functions the same way as a lens. Its biggest advantage is that it is “thin” enough.

On this basis, the distance from the eyes to the display can be greatly reduced, so as to achieve the purpose of miniaturization.

However, it should be noted here that this prototype is still a long way from commercialization.

How far away is it?

Zuckerberg didn’t say, but certainly not this year.

According to the official statement, the technology shown in the Holocake 2 prototype is not yet mature. Cambria, the high-end headset that Meta is launching this year, may use folded optics.

But there is still a long way to go before all these technologies are fully integrated.
