Robots can be programmed to lift a car and even assist with some surgeries, but when it comes to picking up an object they haven't touched before, like an egg, they tend to fail miserably. Now, engineers have come up with an artificial fingertip designed to overcome this limitation. The advance lets machines sense the texture of a surface much as a human fingertip does.

Mandayam Srinivasan, a touch researcher at University College London (UCL) who was not involved in the work, said the researchers were "bringing the realm of natural and artificial touch closer…a necessary step towards improving robotic touch."

Engineers have long sought to make robots as dexterous as humans. One way is to equip them with artificial nerves. But “the current state of robotic haptics is often far inferior to human haptic capabilities,” Srinivasan said.

So when researchers at the University of Bristol set out to design artificial fingertips in 2009, they used human skin as a guide. Their first fingertips were hand-assembled and about the size of a soda can. By 2018, they had switched to 3D printing, which let them shrink the fingertip and all its parts to about the size of an adult's big toe and more easily build up a series of layers that approximate the multilayered structure of human skin. More recently, the scientists have added neural networks to their fingertips, which they call TacTip. These networks help the robot quickly process what it senses and react accordingly, much as a real finger does.

In our own fingertips, when the skin touches an object, a layer of nerve endings deforms and tells the brain what's going on. These nerves send "fast" signals that help us avoid dropping things and "slow" signals that convey an object's shape.

The TacTip's equivalent signals come from an array of needle-like pins under its rubbery surface that move when the surface is touched. The pins are like the bristles of a brush: stiff but flexible. Beneath the array sits, among other things, a camera that detects when and how the pins move. How far the pins bend provides the slow signal, and how quickly they bend provides the fast signal. The neural network translates these signals into fingertip movements, such as tightening its grip or adjusting its angle.
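To make the slow/fast distinction concrete, here is a minimal Python sketch of how a camera's view of the pins could be turned into two signals. It assumes the pins have already been located in each camera frame, and the function name `pin_signals`, its inputs and the frame rate are hypothetical illustrations, not the Bristol team's code: the "slow" value is taken as how far each pin has moved from its resting position, and the "fast" value as how quickly it is moving between the last two frames.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) position of one pin in a camera image, in pixels


def pin_signals(frames: List[List[Point]], fps: float) -> Tuple[List[float], List[float]]:
    """Hypothetical sketch: derive per-pin 'slow' and 'fast' signals.

    frames[t][i] is the position of pin i in camera frame t.
    Assumption: frames[0] shows the fingertip at rest (nothing touching it).
    fps is the camera frame rate in frames per second.

    Returns (slow, fast) for the most recent frame, one value per pin:
      slow[i]: distance pin i has moved from its resting position (how far it bends)
      fast[i]: speed of pin i between the last two frames (how quickly it bends)
    """
    if len(frames) < 2:
        raise ValueError("need a resting frame plus at least one more frame")
    rest, prev, curr = frames[0], frames[-2], frames[-1]
    # Slow signal: total deflection relative to the undeformed skin.
    slow = [math.dist(c, r) for c, r in zip(curr, rest)]
    # Fast signal: displacement over one frame interval, converted to pixels per second.
    fast = [math.dist(c, p) * fps for c, p in zip(curr, prev)]
    return slow, fast


if __name__ == "__main__":
    at_rest = [(0.0, 0.0), (10.0, 0.0)]
    pressed = [(3.0, 4.0), (10.0, 0.0)]  # pin 0 pushed sideways, pin 1 untouched
    print(pin_signals([at_rest, pressed], fps=100.0))
    print(pin_signals([at_rest, pressed, pressed], fps=100.0))
```

Pressing a pin and then holding it still shows the difference: the slow value stays at its deflection while the fast value drops back to zero, loosely echoing how slow nerve signals report shape while fast ones flag change.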

“A lot of our sense of touch is made of mechanical structures (of the skin),” said Sliman Bensmaia, a neuroscientist at the University of Chicago who studies the neuronal basis of touch. “What this approach does is really solve that problem.”

In the new work, University of Bristol engineer Nathan Lepora and colleagues tested the artificial fingertip the same way researchers assess a person's sense of touch. They measured the camera's output as the fingertip touched a corduroy-like material with gaps and ridges of varying heights and densities. The team reports in the Journal of the Royal Society Interface that the artificial fingertip not only detected the gaps and ridges, but its output also closely matched the patterns of nerve signals recorded from human fingertips in the same test.

However, the artificial fingertip is not as sensitive as the real McCoy. Humans can detect a gap as narrow as the lead of a pencil, whereas a gap must be about twice that width before the TacTip notices it, Lepora points out. But he expects its sensitivity to improve once he and his colleagues develop a thinner outer skin.

In a second study, Lepora's team added more pins and a microphone to the TacTip. The microphone mimics another set of nerve endings, deep in our skin, that sense vibrations as our fingers slide across a surface. These nerve endings are known to enhance our ability to sense a surface's roughness.

When the researchers tested the enhanced fingertip's ability to tell apart 13 fabrics, the microphone played the same enhancing role. Again, the signals from the microphone and camera mimicked those recorded from human fingers performing the same test, Lepora noted.

These studies impressed Levent Beker, a mechanical engineer working on wearable sensors at Koç University. "A robotic hand can (now) perceive pressure and texture information similar to a human finger," he says.

"It's a really interesting approach, and I don't think anyone has taken this approach," Bensmaia adds. "It's pretty cool." However, the signals from the artificial and natural fingertips aren't exactly the same, he notes, because signaling in real skin is more intense. "It's only moderately skin-like."

Still, Bensmaia thinks the fingertips could help robots detect, pick up, and manipulate objects. The deformable rubber fingertips should give bionic hands a leg up over current devices with rigid metal fingers, he points out.

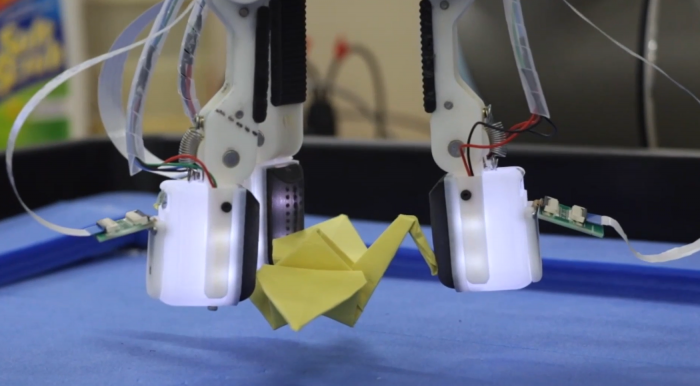

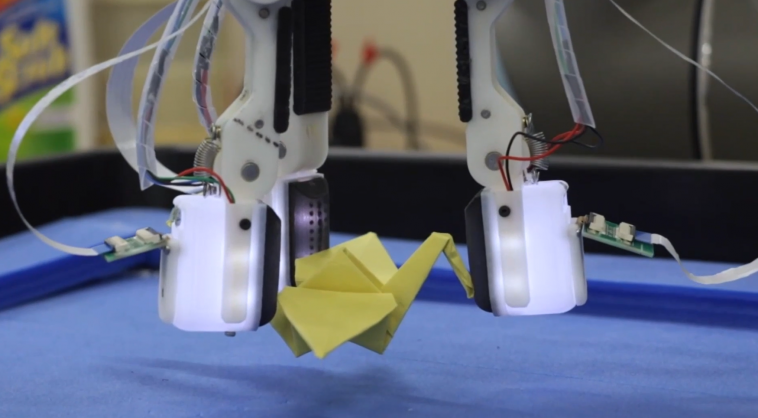

Today's robots must be precisely programmed to handle a specific car part, and they, like prosthetic hands, struggle to grip hard-to-hold objects such as pens or toothbrushes. Lepora points out that fingertips like the TacTip could allow robots and prosthetics to handle objects of all shapes and sizes without needing to be reprogrammed. But Bensmaia notes that it's unclear how small the device can be made.

Lepora is optimistic about shrinking the TacTip. Cameras and microphones keep getting smaller, and improved 3D printing technology is enabling thinner layers. Both he and Bensmaia believe such smaller devices could come closer to human touch, becoming more dexterous because they would be able to detect finer textures.

At a fundamental level, this research is helping to show how human touch works, says materials scientist Robert Shepherd of Cornell University. Lepora and his colleagues have essentially worked out how nerve endings in the skin translate what they sense so that our fingers can catch a ball slipping from our grasp or pick up an origami crane without flattening it, he says.
